In security, history tends to rhyme: the biggest companies don’t show up during a tech shift — they show up after.

AWS launched the cloud in 2006. Wiz didn’t arrive until 2020.

Vendor outsourcing gave rise to SOC 2 compliance in 2010. Drata* launched in 2021.

DevOps took off with GitHub (2008) and Docker (2013). Snyk followed in 2015.

Why the delay? Because security doesn’t lead—it responds to where attackers go first. But with AI, the pattern is accelerating.

AI adoption is moving faster than any tech wave before it. And it doesn’t just expand the attack surface; it gives bad actors the tools to launch more attacks AND more sophisticated ones.

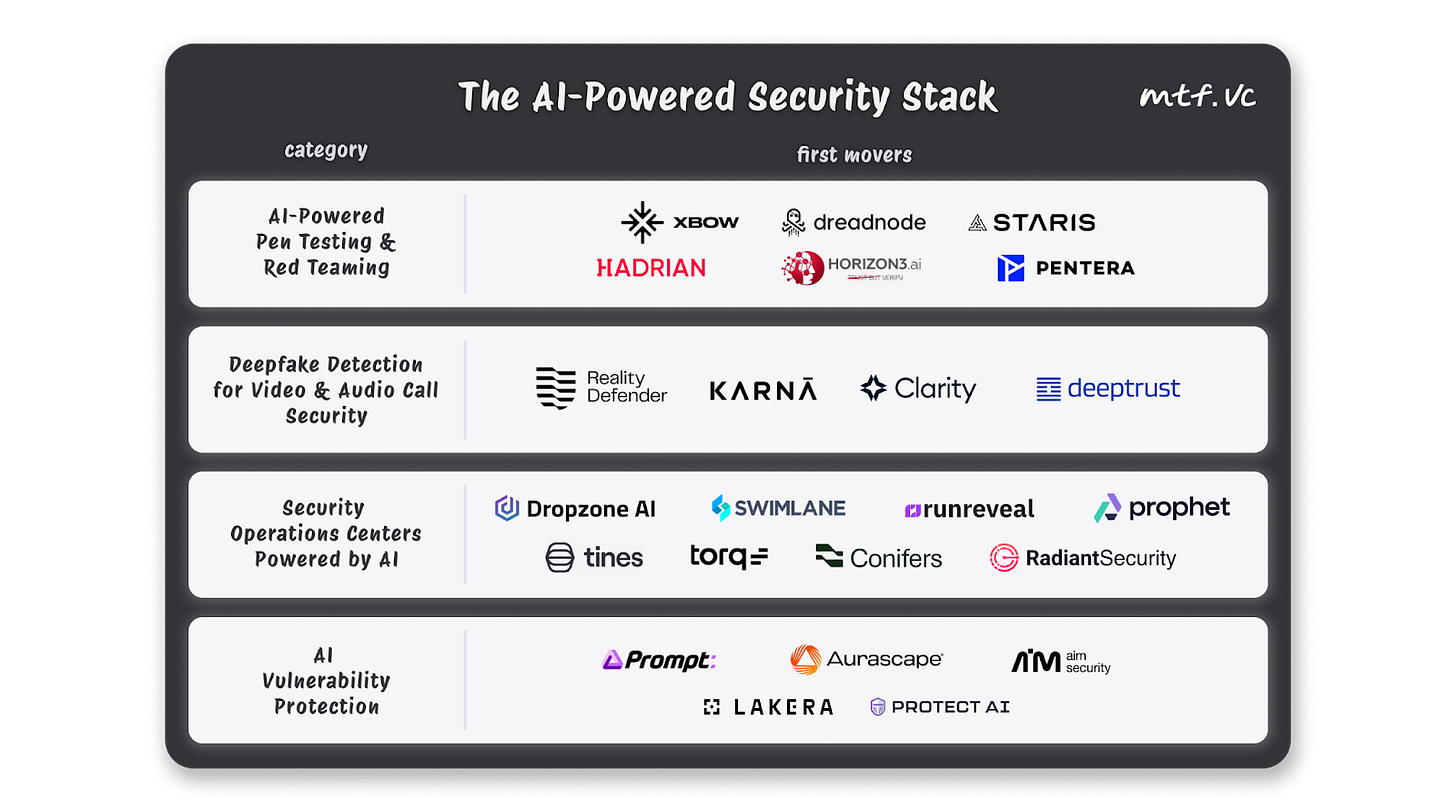

In this post, I break down 4 ways security teams can use AI to fight fire with fire:

1/ Pen Testing and Red Teaming at Machine Speed

Penetration testing is standard practice. Most companies do it to check a compliance box—test for known vulnerabilities, generate a report, move on.

But pen tests are narrow, predictable, and scheduled. They don’t reflect how attackers actually behave, and they definitely don’t keep up with AI-powered threats.

Red teaming is meant to fill that gap. It simulates real-world attacks—phishing, lateral movement, evasion—and tests how well your security team detects and responds. But even red teaming has a problem: it’s manual, slow, and typically run once a year. Meanwhile, attacks are constant.

Now, that’s starting to change. A new generation of tools is emerging that automate both pen testing and red teaming—running continuously, simulating real threats, and probing complex systems, especially those built on LLMs or other AI components.

The “run continuously” point is particularly compelling. Today, pen tests and red teaming exercises are once-in-a-while, services-based activities. If software can run them continuously, both markets could grow rapidly.

Over the last few months, I’ve met with at least 10 strong teams building AI-based pen testing and red teaming solutions. While it’s still early, XBOW has strong buzz, and a number of security leaders have pointed to companies like Hadrian, Horizon3, Staris, Pentera, and Dreadnode as promising. As expected, many teams in Israel are looking at this market and building quickly in stealth.

The biggest question for these startups is whether to go after the low-cost, high-efficiency use case tied to compliance, or build high-end software that can act as an “NSA agent in-house” mirroring the most advanced attackers and working for you 24/7.

The reality is that most companies don’t have budget for the latter, as most customer and compliance demands are for pen tests, not red teaming exercises. However, red teaming generally commands a higher ACV given its greater sophistication.

The positioning, product, and price point look different for each, and I suspect startups will be forced to choose where to compete.

There will be room for multiple winners, and from what I can tell, no one is at scale yet. I’d love to see more startups taking on pen testing and red teaming with AI.

2/ Spotting and Defending Against Deepfakes

In May 2024, a deepfake scam made headlines when an employee at Arup, a UK engineering firm, was tricked by an AI-generated video call into sending $25M to attackers.

Suddenly, deepfakes went from theory to real-life threat.

Attackers are starting to use AI-generated voices, faces, and full personas to impersonate executives, trick employees, and bypass security protocols.

In fact, in the second half of 2024, voice-based phishing attacks (or “vishing” attacks) increased 442% over the first half of the year.

Forget phishing emails. Imagine getting a real-time video call from your “Head of IT” asking for sensitive access. It’s going to be really hard to train employees to recognize these kinds of attacks.

The most compelling startup I’ve seen in this space is DeepTrust*. They protect video and audio calls against phishing, social engineering, and deepfake attacks in real time. Focusing on video and call security, much like Abnormal focuses on email security, has made them stand out as a workflow-first rather than tech-first solution for security teams.

DeepTrust is giving security teams the ammo they need to keep employees safe. Others building deepfake detection solutions include Reality Defender, Clarity, and Karna.

Today, defending against deepfakes has the most urgency in finance and highly sensitive industries—FinTechs, banks, insurance companies, and healthcare companies are the most vulnerable. When money or highly sensitive information is involved, the stakes will be too high to leave employees without protection as these attacks grow in number and sophistication.

3/ The AI-Powered SOC Stack

Security operations teams are outmatched. Too many alerts, not enough humans, and systems growing more complex by the day.

What happens when attackers ramp up their use of AI to dramatically increase the number of attacks? It’s not a pretty picture.

This is where AI has a real shot to flip the script. The SOC can evolve from a human-led, tool-heavy model into something faster, leaner, and smarter: a system that detects, analyzes, and even remediates threats with minimal human input.

We’re already seeing pieces of this future:

Detection-as-code powered by LLMs that summarize, write, and tune rules;

Alert triage that actually understands context, not just keywords; and,

Incident summaries generated in seconds.

Companies like Dropzone AI, Prophet Security, Radiant Security, and Conifers are all building AI SOC automations. Today, much of their work is human-assisted. Over time, as agents are trusted with more complex tasks, the security operations center can increasingly be run by specialized AI SOC agents.

The independent AI SOC companies aren’t the only ones building agents here. Companies like RunReveal*, whose core product is a security data platform, have created Model Context Protocol (MCP) servers that deliver AI SOC capabilities on top of the SIEM data they already manage.

There are also players like Torq, Tines, and Swimlane that allow for a broader set of workflow automations.

Given the pressures already facing security operations centers, including lack of automation, lack of skilled staff, and high turnover, a surge of attacks from AI-powered adversaries will only make things harder. This is a space I’m following closely, and I expect it to create a number of meaningful startup opportunities.

4/ AI Vulnerability Management

You can’t patch what you don’t know, and AI can break things in ways we’ve never seen before.

With AI, the attack surface expands. Traditional tools scan for CVEs, outdated libraries, and misconfigurations. But they don’t account for prompt injection, model inversion, data leakage, or training data poisoning—all of which are uniquely AI-native failure modes. That’s why we’re seeing the rise of AI vulnerability management platforms—tools purpose-built to test and monitor LLMs, embeddings, fine-tuned models, and the custom logic that surrounds them.

We’ve already seen a number of startups come out of the gate with significant funding to protect against AI vulnerabilities. Aurascape quickly raised over $60M, Protect AI over $100M, Lakera $30M, Aim Security $28M, and Prompt Security over $20M.

This space is still early. We need to see more AI apps in production before it becomes a top focus for most security teams. However, the adoption curve for AI tools is like nothing we’ve seen before (Cursor, Windsurf, Clay, Harvey, Mercor… not to mention enterprise Claude and ChatGPT) so we may see a swift shift towards AI security as a higher priority for CISOs.

As AI vulnerabilities expand the attack surface and increase the number of attacks, I suspect we’ll see AI agents not just prioritizing alerts but suggesting and implementing fixes. In this talk, Rick Doten, CISO at Carolina Complete Health, describes the agentic future as security teams having access to “unlimited interns with unlimited time,” with humans playing the role of master orchestrator or manager. It’s a future we can already glimpse today.

The Coming Wave of AI Security Unicorns

Despite the crazy funding rounds and ChatGPT’s massive user numbers, AI in the enterprise is still early. And the tools to protect against AI-powered adversaries and the expanded attack surface have yet to reach their full market potential.

We’re also very much in copilot mode. Security teams aren’t ready to (nor should they) trust AI autonomously, especially with action-oriented tasks like closing out tickets or patching. I predict we’ll stay in assist mode, not replace mode, for some time.

The next generation of security unicorns will have founders that understand BOTH security and AI. Understanding how to build a high-functioning agent, or defend against AI attacks, requires a deep understanding of modern AI. I’m excited to see the next generation of security founders tackle the space with this AI-first mindset.

*Company that I have invested in, either personally or professionally.
